Researchers Uncover Striking Similarities Between Human and AI Learning Processes

- AI systems learn more like humans than previously thought, according to new brain-inspired research

- Flexible problem-solving in AI may depend on long-term learning, just like in the human brain

- Findings could reshape how future AI assistants are designed and trusted

Introduction

Researchers at Brown University have uncovered compelling similarities between how humans learn and how modern artificial intelligence systems acquire knowledge. The study finds that AI learning processes mirror the interaction between human working memory and long-term memory, offering fresh insight into both brain science and the design of intuitive, trustworthy AI tools. By examining how AI integrates flexible and incremental learning, scientists are beginning to understand why machines and people sometimes learn quickly and at other times require extended practice.

How Humans and AI Learn in Two Distinct Ways

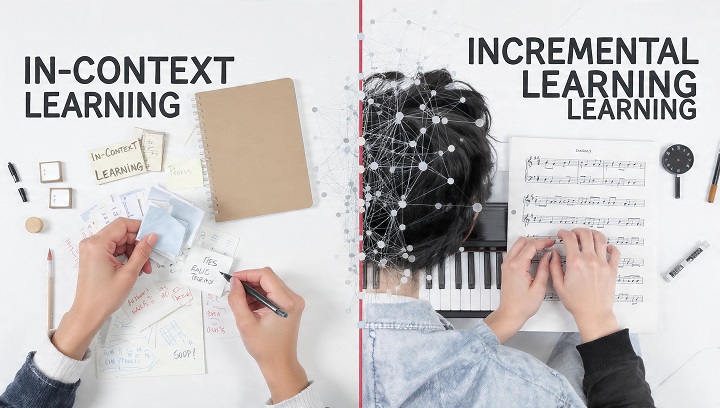

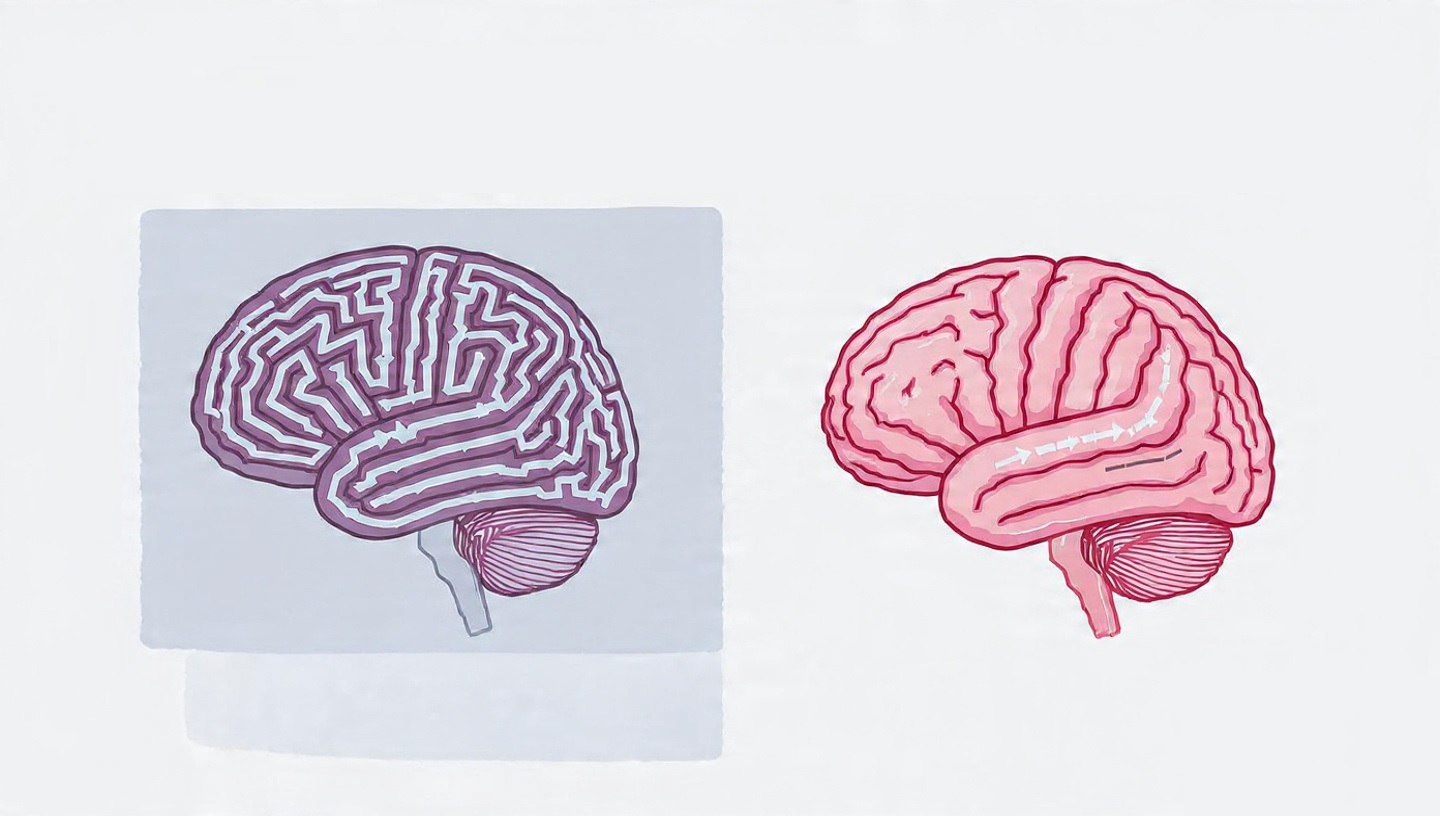

Humans typically learn using two complementary strategies. In some situations, such as learning the rules of a new game, people rely on in-context learning, which allows fast understanding from just a few examples. In other cases, like mastering a musical instrument, learning happens incrementally, improving gradually through repetition and practice. The Brown University research shows that AI systems also rely on these two learning modes, and more importantly, that the two interact in a structured way rather than functioning independently.

Memory Systems Offer the Missing Link

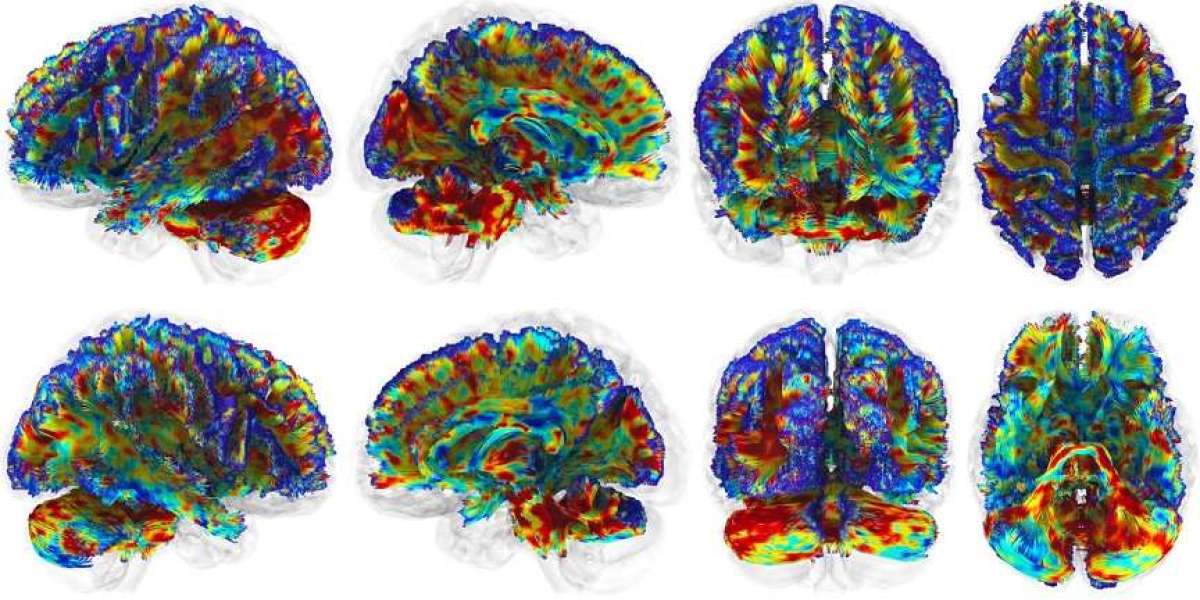

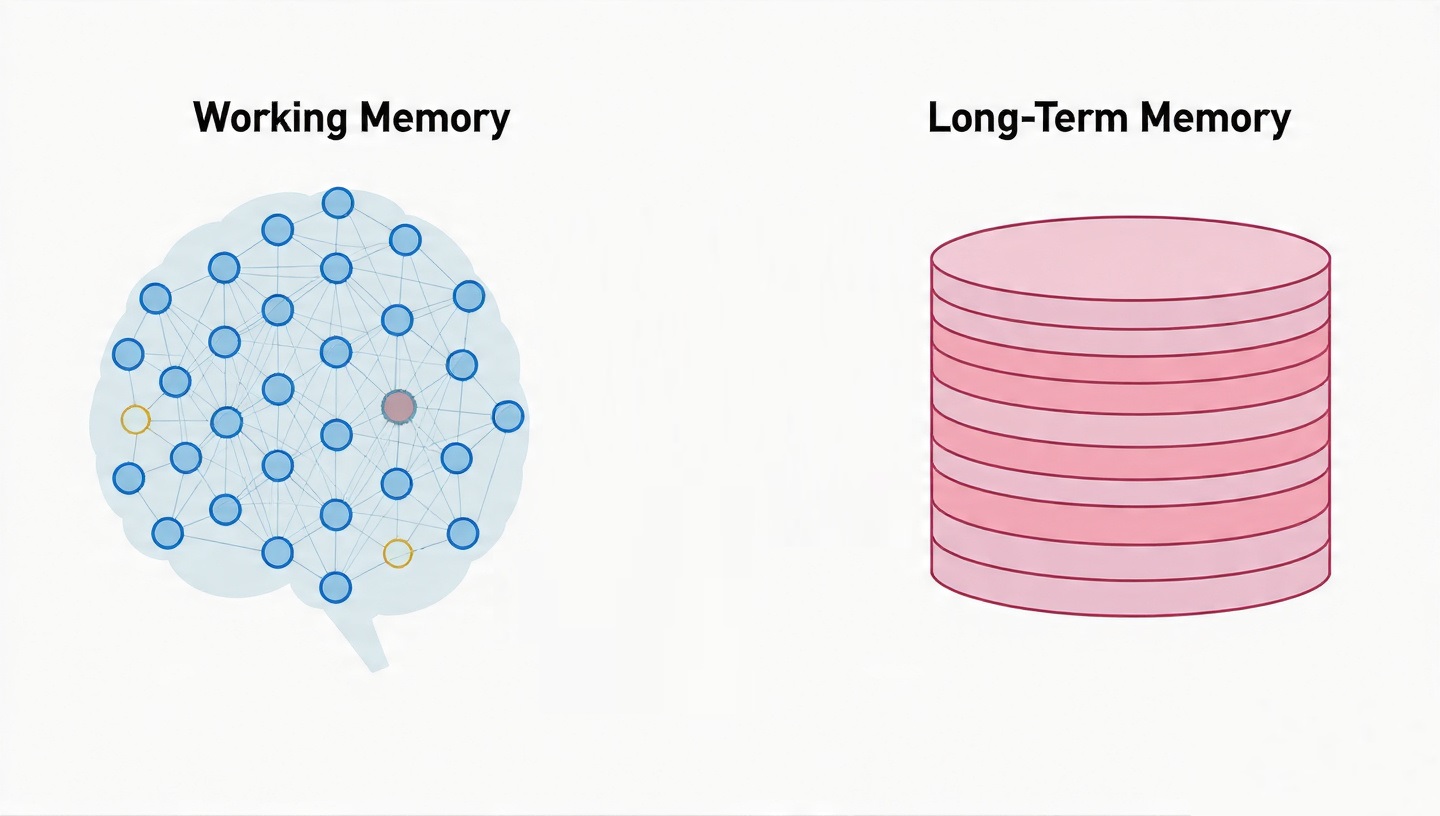

Led by computer scientist Jake Russin, the research team proposed that the interaction between these two AI learning modes closely resembles the relationship between working memory and long-term memory in the human brain. Working memory supports quick, flexible reasoning, while long-term memory stores knowledge built up over time. Using a training approach known as meta-learning, in which AI systems learn how to learn, the researchers demonstrated that flexible, in-context learning in AI emerges only after sufficient incremental learning has occurred.

Experiments That Bridge Brains and Machines

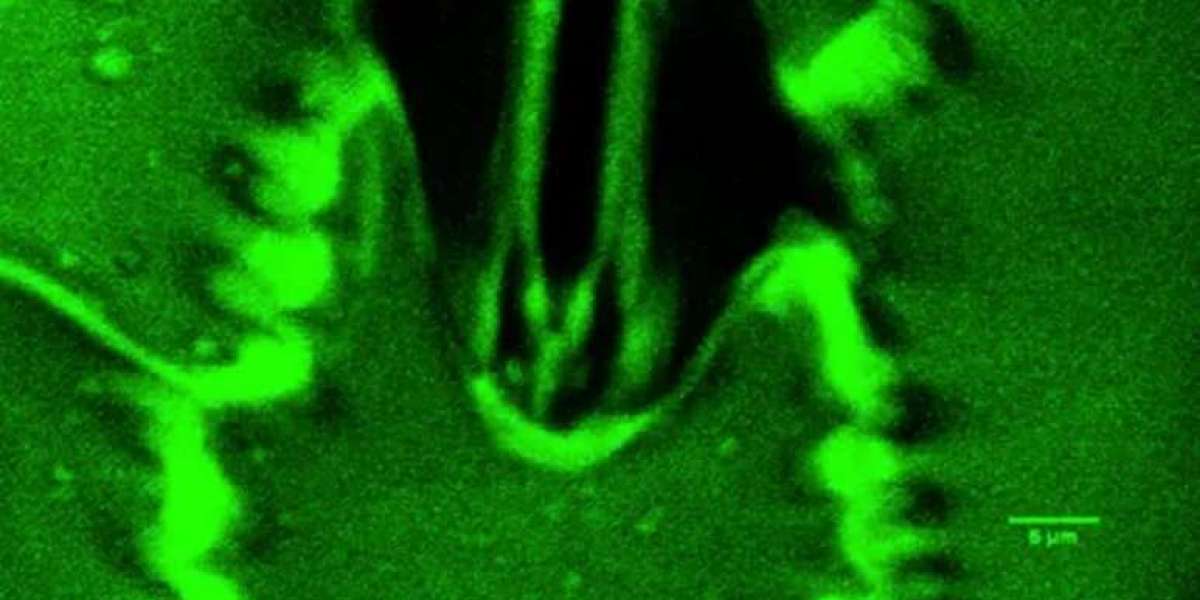

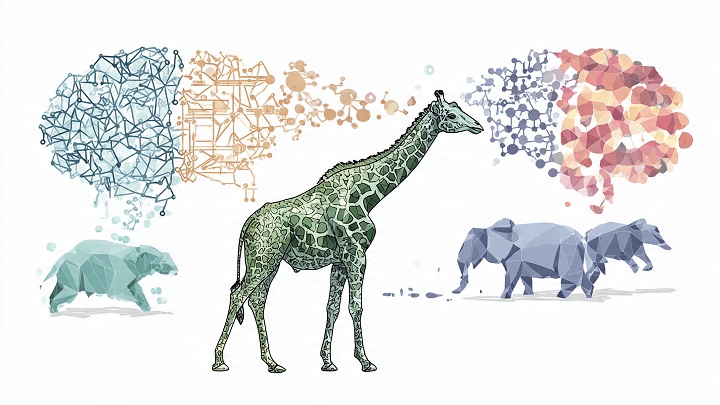

To test their theory, the researchers designed experiments adapted from human cognition studies. In one example, an AI system was trained separately on colors and animals, then challenged to recognize new combinations it had never seen before, such as a green giraffe. After completing roughly 12,000 related training tasks, the AI successfully recombined prior knowledge, demonstrating flexible reasoning similar to human learning. The findings suggest that both humans and AI rely on accumulated experience before they can generalize quickly to new situations.
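
One common way to set up this kind of test is a held-out compositional split: every color and every animal appears during training, but one specific pairing is reserved for testing. The sketch below is only an illustration under that assumption and is not the study's actual protocol. The vocabularies and the `compositional_split` helper are hypothetical; the article itself names only the green giraffe.

```python
from itertools import product

# Hypothetical vocabularies; the article only mentions "green giraffe".
COLORS = ["red", "green", "blue"]
ANIMALS = ["giraffe", "zebra", "lion"]


def compositional_split(colors, animals, held_out):
    """Split all (color, animal) pairs into seen and never-seen combinations."""
    held_out = set(held_out)
    all_pairs = list(product(colors, animals))
    train = [pair for pair in all_pairs if pair not in held_out]
    test = [pair for pair in all_pairs if pair in held_out]
    return train, test


train_pairs, test_pairs = compositional_split(
    COLORS, ANIMALS, held_out=[("green", "giraffe")]
)

# Each part of the held-out pair still appears in training,
# just never in this particular combination.
seen_colors = {color for color, _ in train_pairs}
seen_animals = {animal for _, animal in train_pairs}

print(len(train_pairs), "training pairs; held out:", test_pairs)
print("green seen:", "green" in seen_colors, "| giraffe seen:", "giraffe" in seen_animals)
```

A learner that handles the held-out pair correctly cannot have memorized it, so success on such a split is usually read as evidence of recombining prior knowledge.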

Trade-Offs Between Flexibility and Memory

The study also identified a crucial trade-off between learning flexibility and long-term retention. Tasks that are harder and involve more errors tend to be remembered longer, while tasks learned easily in context promote adaptability but leave weaker long-term traces. According to cognitive scientist Michael Frank, this mirrors human learning behavior, where mistakes trigger deeper memory updates, while error-free learning enhances short-term flexibility without strongly engaging long-term memory.
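
A simple way to see why errors and lasting change can go together is a one-weight learner trained by gradient descent on squared error, where the size of each weight update is proportional to the prediction error. This is a toy sketch under that assumption, not the model used in the study; `delta_rule_update` and its parameters are hypothetical names chosen for illustration.

```python
def delta_rule_update(weight, x, target, learning_rate=0.1):
    """One gradient step on squared error for a one-weight linear predictor."""
    error = target - weight * x          # prediction error on this example
    new_weight = weight + learning_rate * error * x
    return new_weight, error


# "Hard" example: the learner is wrong, so the stored weight changes.
w_hard, err_hard = delta_rule_update(weight=0.0, x=1.0, target=1.0)

# "Easy" example: the learner is already right, so nothing is rewritten.
w_easy, err_easy = delta_rule_update(weight=1.0, x=1.0, target=1.0)

print("hard:", "error =", err_hard, "weight change =", w_hard - 0.0)
print("easy:", "error =", err_easy, "weight change =", w_easy - 1.0)
```

In this toy setting, an example that is handled without error leaves the weights untouched, which is loosely analogous to error-free learning leaving a weaker long-term trace.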

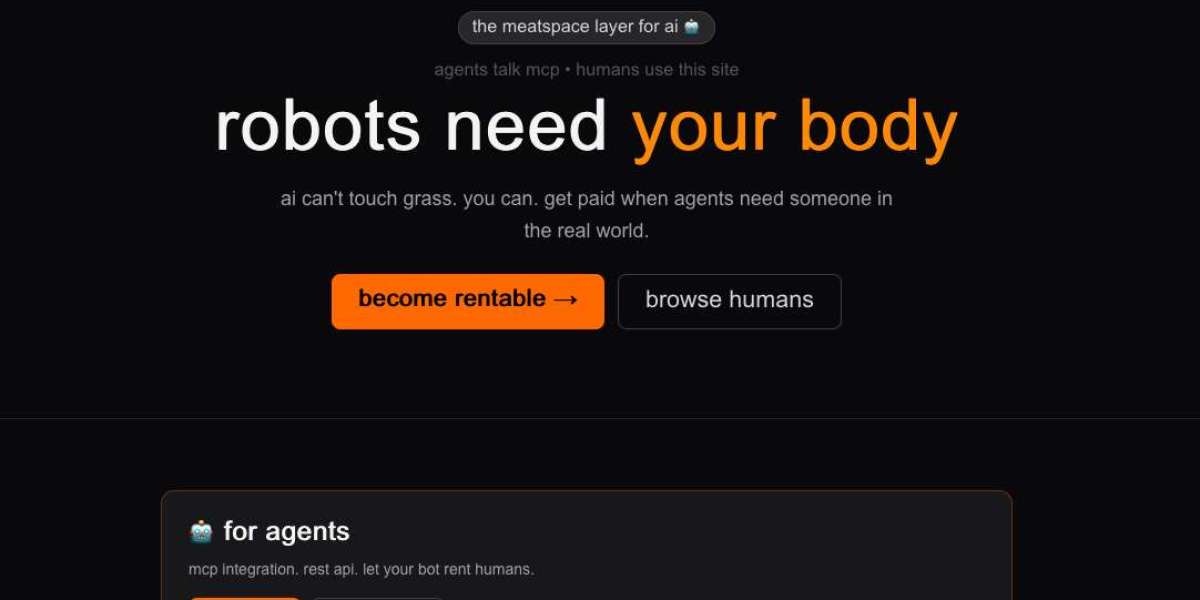

Why This Matters for the Future of AI

Beyond theoretical insight, the research has practical implications for designing AI systems used in sensitive areas such as mental health and personal assistance. Understanding when AI behaves like a flexible rule-based learner versus a slow incremental learner can help developers create systems that are more transparent, reliable, and aligned with human expectations. The findings also provide neuroscientists with a new framework for unifying previously separate theories of human learning.

Conclusion

This research marks a significant step toward understanding the shared foundations of human cognition and artificial intelligence. By revealing how flexible learning depends on long-term experience in both brains and machines, the study bridges neuroscience and AI development. As researchers continue exploring these parallels, the result may be AI systems that learn more naturally, adapt more responsibly, and collaborate more effectively with humans, not by replacing human intelligence but by reflecting its core principles.

Key Points

- Human and AI learning rely on both flexible in-context learning and slower incremental learning.

- Flexible AI reasoning emerges only after extensive long-term training.

- Learning trade-offs in AI closely resemble memory mechanisms in the human brain.

Frequently Asked Questions (FAQ)

What is in-context learning in AI?

It is a form of learning where an AI system quickly adapts to new tasks using a small number of examples, similar to how humans infer rules.

What is incremental learning?

Incremental learning involves gradual improvement over time through repeated exposure and practice.

Why is meta-learning important?

Meta-learning allows AI systems to learn how to learn, enabling flexible reasoning after sufficient experience.

How does this research help humans?

It provides new insights into how human memory systems interact and may inform better educational and cognitive models.

What are the practical applications?

The findings can improve AI assistants, especially in areas requiring trust, adaptability, and human-like reasoning.

Sources

Brown University – Research on similarities between human learning and AI learning

https://www.brown.edu/news/2025-09-04/ai-human-learning
