
Experts have warned about businesses' growing use of artificial intelligence tools, such as ChatGPT's Deep Research agent, to analyze emails, CRM data, and internal reports when making strategic decisions.

These platforms offer automation and efficiency, but they also pose new security challenges, particularly when it comes to sensitive business information.

A recent report by Radware revealed a vulnerability in ChatGPT's Deep Research tool, dubbed "ShadowLeak." Unlike traditional vulnerabilities, it leaks sensitive data silently, with no visible sign to the user.

The vulnerability allows attackers to extract sensitive data directly from OpenAI's servers without any user interaction.

"This is the essence of this zero-click attack," said David Aviv, chief technology officer at Radware.

He added, "It requires no user action, no visual cue, and no way for victims to know their data has been compromised. Everything happens entirely behind the scenes through the actions of independent agents on OpenAI's cloud servers."

ShadowLeak also operates independently of endpoints or networks, making it extremely difficult for enterprise security teams to detect.

Researchers demonstrated that simply sending an email containing hidden instructions can automatically trigger the Deep Research agent to leak information.
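As a rough illustration of how a mail pipeline might look for such hidden instructions, the sketch below flags text inside HTML elements whose inline style hides them from a human reader. The style patterns and the `find_hidden_text` helper are assumptions made for this example, not details taken from the Radware report, and a real filter would need to cover many more hiding techniques.

```python
import re
from html.parser import HTMLParser

# Inline styles that commonly hide text from a human reader.
# Illustrative assumption only; this list is not from the Radware report.
HIDDEN_STYLE = re.compile(
    "|".join([
        r"display\s*:\s*none",
        r"visibility\s*:\s*hidden",
        r"font-size\s*:\s*[01](px|pt)?\s*(;|$)",
        r"opacity\s*:\s*0\s*(;|$)",
    ]),
    re.IGNORECASE,
)

# Tags that never have a closing tag, so they cannot wrap hidden text.
VOID_TAGS = {"br", "img", "hr", "input", "meta", "link"}


class HiddenTextFinder(HTMLParser):
    """Collects text found inside elements styled to be invisible."""

    def __init__(self):
        super().__init__()
        self._hidden_tag = None  # tag name that opened the hidden region
        self._depth = 0          # nesting depth of that same tag name
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if self._hidden_tag is not None:
            # Already inside a hidden region: only track same-name nesting.
            if tag == self._hidden_tag:
                self._depth += 1
            return
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        if HIDDEN_STYLE.search(style):
            self._hidden_tag = tag
            self._depth = 1

    def handle_endtag(self, tag):
        if tag == self._hidden_tag:
            self._depth -= 1
            if self._depth == 0:
                self._hidden_tag = None

    def handle_data(self, data):
        if self._hidden_tag is not None and data.strip():
            self.hidden_text.append(data.strip())


def find_hidden_text(html_body):
    """Return the text fragments a reader would not see in the email."""
    finder = HiddenTextFinder()
    finder.feed(html_body)
    finder.close()
    return finder.hidden_text
```

Any non-empty result could be routed to a human reviewer before the message is made available to an AI agent. The check only inspects inline styles, so it would miss text hidden through stylesheets, matching foreground and background colors, or other tricks.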

Pascal Geenens, Director of Cyber Threat Intelligence at Radware, explained, "Companies adopting AI cannot rely on built-in safeguards alone to prevent abuse."

He continued, "AI-powered workflows can be manipulated in ways not yet anticipated, and these attack vectors often bypass the visibility and detection capabilities of traditional security solutions."

This vulnerability represents the first instance of a zero-click, server-side data leak, one that leaves little evidence visible from the enterprise side.

With ChatGPT reported to have more than 5 million paying business users, the potential for exposure is significant.

Human oversight and strict access controls remain critical when connecting sensitive data to autonomous AI agents.

Therefore, organizations adopting AI must approach these tools with caution, continually assess security vulnerabilities, and combine technology with thoughtful operational practices.

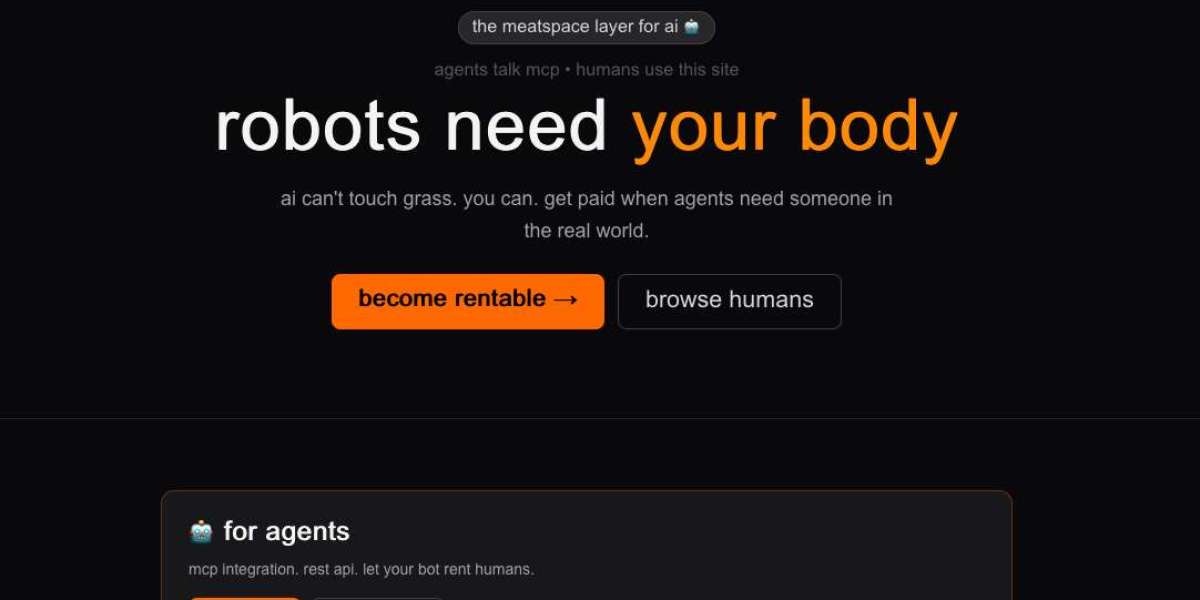


How to keep your data safe

- Implement multi-layered cybersecurity defenses to protect against multiple types of attacks simultaneously.

- Regularly monitor AI-powered workflows to detect any unusual activity or potential data leaks.

- Apply best-in-class antivirus solutions to all systems to protect against traditional malware attacks.

- Maintain robust ransomware protection to shield sensitive information from threats that spread laterally across the network.

- Implement strict access controls and user permissions for AI tools that interact with sensitive data.

- Ensure human oversight when autonomous AI agents access or process sensitive information.

- Implement logging and auditing of AI agent activity to identify any anomalies early.

- Integrate additional AI tools to detect anomalies and generate automated security alerts.

- Educate employees about AI-related threats and the risks of autonomous agent workflows.

- Combine software defenses, operational best practices, and continuous vigilance to reduce exposure to risk.
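The access-control and logging recommendations above can be sketched as a simple gate on an agent's outbound requests. This is a minimal illustration, assuming the agent's tool calls pass through infrastructure the organization controls; it would not observe a hosted agent that runs entirely on a vendor's servers, as in the ShadowLeak scenario. The host names, the `agent_audit` logger name, and the `review_agent_request` function are all hypothetical.

```python
import logging
from urllib.parse import urlparse

# Hypothetical allowlist of hosts the agent is permitted to contact.
ALLOWED_HOSTS = {"intranet.example.com", "api.example.com"}

# Dedicated logger so agent activity can be audited separately.
audit_log = logging.getLogger("agent_audit")


def review_agent_request(agent_id, url):
    """Log the outbound request and return True only for allowlisted hosts."""
    host = (urlparse(url).hostname or "").lower()
    allowed = host in ALLOWED_HOSTS
    audit_log.info("agent=%s host=%s allowed=%s", agent_id, host, allowed)
    return allowed
```

A caller would block or escalate any request for which the function returns `False`, and the audit log gives reviewers a record of every destination the agent tried to reach.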
